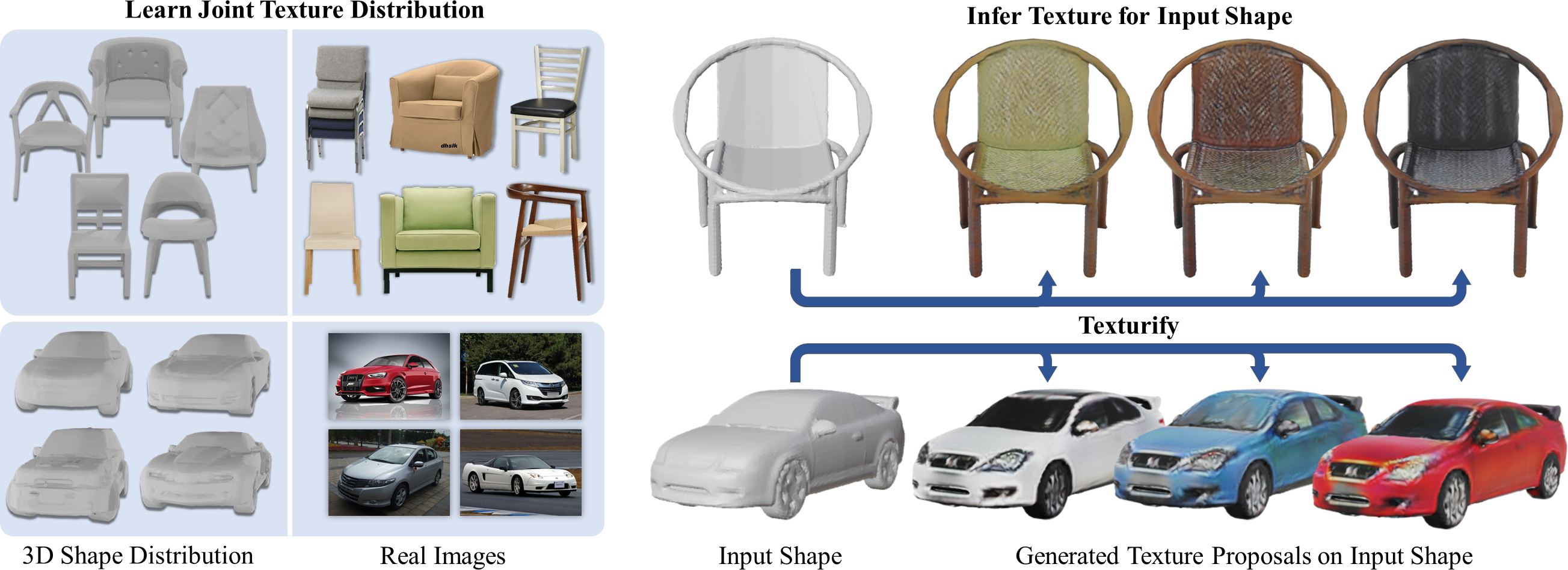

Texture cues on 3D objects are key to compelling visual representations, enabling high visual fidelity with inherent spatial consistency across views. Since textured 3D shapes remain scarce, learning a 3D-supervised, data-driven method that predicts a texture from a 3D input is very challenging.

We thus propose Texturify, a GAN-based method that leverages a 3D shape dataset of an object class and learns to reproduce the distribution of appearances observed in real images by generating high-quality textures.

In particular, our method does not require any 3D color supervision or correspondence between shape geometry and images to learn the texturing of 3D objects. Texturify operates directly on the surface of the 3D objects by introducing face convolutional operators on a hierarchical 4-RoSy parameterization to generate plausible object-specific textures. Employing differentiable rendering and adversarial losses that critique individual views and consistency across views, we learn a high-quality distribution of surface textures from real-world images.
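The face convolution itself is not spelled out here. One way to picture it is a minimal sketch, assuming each face's neighborhood has already been gathered into a fixed-size, consistently ordered index list (for example, ordered in the local frame given by the 4-RoSy field). A separate weight matrix is then applied per neighborhood slot, and the hierarchy is handled by averaging fine-level faces into coarser parent faces. `face_conv`, `face_pool`, the neighbor layout and the `parent` mapping are hypothetical names and conventions, not the authors' code.

```python
import numpy as np

def face_conv(features, neighbors, weights, bias):
    """Hypothetical face convolution.

    features:  (F, C_in) per-face features
    neighbors: (F, K) indices of each face's K-face neighborhood, assumed to be
               ordered consistently in a local frame; slot 0 is the face itself
    weights:   (K, C_in, C_out) one weight matrix per neighborhood slot
    bias:      (C_out,)
    """
    gathered = features[neighbors]  # (F, K, C_in)
    # Sum over neighborhood slots k and input channels c.
    return np.einsum("fkc,kco->fo", gathered, weights) + bias

def face_pool(features, parent, num_coarse):
    """Average fine-level face features into their (assumed) parent faces."""
    sums = np.zeros((num_coarse, features.shape[1]))
    counts = np.zeros(num_coarse)
    np.add.at(sums, parent, features)  # unbuffered scatter-add per parent
    np.add.at(counts, parent, 1)
    return sums / np.maximum(counts, 1)[:, None]
```

Because every face sees its neighbors in the same slot order, the learned weights behave like an image convolution kernel laid out on the surface, without needing a global UV map.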

Experiments on car and chair shape collections show that our approach outperforms the state of the art by an average of 22% in FID score.
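FID (Fréchet Inception Distance) fits a Gaussian to image features of real and generated images and measures the distance between the two fits, so lower is better: `FID = ||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2))`. Below is a NumPy-only sketch, assuming the feature vectors (typically Inception activations) were already extracted. The evaluation protocol behind the 22% figure (which views are rendered, the feature extractor, sample counts) is not described here.

```python
import numpy as np

def _sqrtm_psd(mat):
    """Square root of a symmetric positive semi-definite matrix via eigh."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative round-off
    return (vecs * np.sqrt(vals)) @ vecs.T

def fid(feats_real, feats_fake):
    """FID between two (N, D) feature sets."""
    mu1, mu2 = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    s1 = np.cov(feats_real, rowvar=False)
    s2 = np.cov(feats_fake, rowvar=False)
    root1 = _sqrtm_psd(s1)
    # Tr((S1 S2)^(1/2)) equals Tr((S1^(1/2) S2 S1^(1/2))^(1/2)); the inner
    # matrix is symmetric PSD, so the eigh-based square root applies.
    inner = root1 @ s2 @ root1
    inner = 0.5 * (inner + inner.T)  # enforce exact symmetry
    cross = _sqrtm_psd(inner)
    return float(np.sum((mu1 - mu2) ** 2)
                 + np.trace(s1) + np.trace(s2) - 2.0 * np.trace(cross))
```

A relative improvement such as the reported average is then `(FID_baseline - FID_ours) / FID_baseline`.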

The texture latent space learned by our method produces smoothly varying, valid textures when traversing the latent space for a fixed shape.
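Such a traversal can be produced by decoding a sequence of latent codes between two endpoints while keeping the shape fixed. A minimal sketch, assuming plain linear interpolation between codes and a hypothetical trained generator `G(shape, z)`. Which latent space is interpolated and how many frames are used are assumptions, not details given on this page.

```python
import numpy as np

def interpolate_latents(z_start, z_end, num_steps):
    """Linearly interpolate between two latent codes, endpoints included."""
    ts = np.linspace(0.0, 1.0, num_steps)[:, None]  # (num_steps, 1)
    return (1.0 - ts) * z_start + ts * z_end        # (num_steps, dim)

# Hypothetical usage with a trained generator G(shape, z):
# for z in interpolate_latents(z_a, z_b, 8):
#     texture = G(fixed_shape, z)
```

Linear interpolation is the simplest choice; spherical interpolation is a common alternative when codes are sampled from a high-dimensional Gaussian.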


The learned latent space is consistent in style across different shapes, i.e. the same code represents a similar style across shapes, and can be used, for example, in style transfer applications.

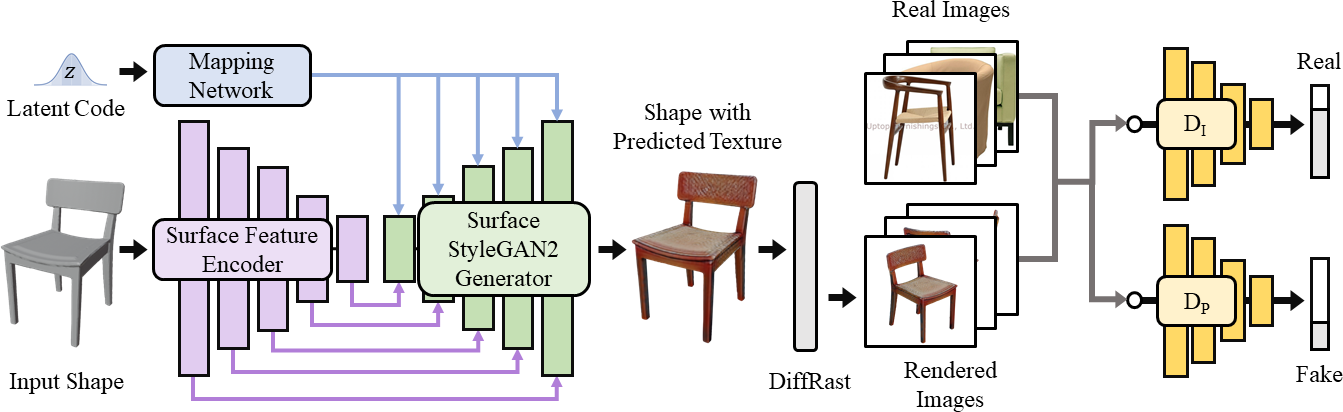

Surface features from an input 3D mesh are encoded through a face convolution-based encoder and decoded through a StyleGAN2-inspired decoder to generate textures directly on the surface of the mesh.
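A defining ingredient of StyleGAN2 is weight modulation and demodulation: a style vector derived from the latent code scales the kernel's input channels, and each output channel is then renormalized. The sketch below applies that step to a face-convolution kernel. It assumes the kernel layout `(K, C_in, C_out)` (one matrix per neighborhood slot) and that `style` has already been produced from the latent code, for example by an affine layer. How closely the Texturify decoder follows StyleGAN2 in this respect is an assumption.

```python
import numpy as np

def modulate_demodulate(weights, style, eps=1e-8):
    """StyleGAN2-style weight (de)modulation for a face-conv kernel.

    weights: (K, C_in, C_out) kernel, one matrix per neighborhood slot
    style:   (C_in,) per-input-channel scale derived from the latent code
    """
    w = weights * style[None, :, None]                 # modulate input channels
    norm = np.sqrt(np.sum(w ** 2, axis=(0, 1)) + eps)  # L2 norm per output channel
    return w / norm[None, None, :]                     # demodulate
```

After demodulation each output channel has (almost exactly) unit norm, so the style changes the relative mix of input channels rather than the overall activation scale.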

To ensure that generated textures are realistic, the textured mesh is differentiably rendered from different viewpoints and is critiqued by two discriminators.
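Rendering from different viewpoints requires a camera pose for each view. A minimal sketch of one common setup, assuming cameras on a sphere around a centered object, parameterized by azimuth and elevation, with an OpenGL-style look-at view matrix (y up, camera looking down -z). The radius, elevation range, and `sample_views` helper are hypothetical choices, not the page's settings.

```python
import numpy as np

def camera_position(azimuth, elevation, radius):
    """Point on a sphere around the origin (angles in radians, y up)."""
    return radius * np.array([
        np.cos(elevation) * np.sin(azimuth),
        np.sin(elevation),
        np.cos(elevation) * np.cos(azimuth),
    ])

def look_at(eye, target=None, up=(0.0, 1.0, 0.0)):
    """4x4 world-to-camera matrix; the camera looks down its -z axis."""
    target = np.zeros(3) if target is None else np.asarray(target, dtype=float)
    up = np.asarray(up, dtype=float)
    forward = target - eye
    forward /= np.linalg.norm(forward)
    right = np.cross(forward, up)
    right /= np.linalg.norm(right)
    true_up = np.cross(right, forward)
    view = np.eye(4)
    view[0, :3], view[1, :3], view[2, :3] = right, true_up, -forward
    view[:3, 3] = -view[:3, :3] @ eye  # move the eye to the origin
    return view

def sample_views(num_views, rng, radius=2.5, elev_range=(0.1, 0.5)):
    """Hypothetical random view sampling around the object."""
    return [look_at(camera_position(rng.uniform(0.0, 2.0 * np.pi),
                                    rng.uniform(*elev_range), radius))
            for _ in range(num_views)]
```

Each matrix would then be handed, together with a projection matrix, to a differentiable rasterizer so that gradients from the discriminators flow back to the surface texture.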

An image discriminator D_I operates on full images, either real photographs or rendered views, while a patch-consistency discriminator D_P encourages consistency across views by operating on patches taken either from a single real view or from different rendered views.
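The intuition behind the patch-consistency discriminator: patches cropped from one real photo always share a single consistent appearance. If the generator produced textures whose appearance changes from view to view, a stack of patches drawn from several rendered views would look unlike a real stack. The sketch below shows how such inputs could be assembled. It assumes `(H, W, C)` images and channel-wise concatenation of patches. The patch size, patch count, and concatenation scheme are assumptions, not details confirmed on this page.

```python
import numpy as np

def random_patch(image, size, rng):
    """Crop a random size x size patch from an (H, W, C) image."""
    h, w = image.shape[:2]
    y = rng.integers(0, h - size + 1)
    x = rng.integers(0, w - size + 1)
    return image[y:y + size, x:x + size]

def real_patch_stack(real_view, num_patches, size, rng):
    """Real sample: all patches come from ONE real photograph."""
    patches = [random_patch(real_view, size, rng) for _ in range(num_patches)]
    return np.concatenate(patches, axis=-1)  # (size, size, C * num_patches)

def fake_patch_stack(rendered_views, size, rng):
    """Fake sample: one patch from EACH rendered view of the same mesh."""
    patches = [random_patch(view, size, rng) for view in rendered_views]
    return np.concatenate(patches, axis=-1)  # (size, size, C * num_views)
```

Both stacks have the same shape, so D_P sees real and fake inputs of identical format and can only tell them apart by cross-patch consistency (and realism).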

For more work on similar tasks, please check out:

Learning Texture Generators for 3D Shape Collections from Internet Photo Sets learns a texture generator for 3D objects from 2D image collections using a common UV parameterization.

Texture Fields: Learning Texture Representations in Function Space learns a texture generator parameterized as an implicit field.

SPSG: Self-Supervised Photometric Scene Generation from RGB-D Scans tackles scene completion both in terms of geometry and texture using a 3D grid parameterization.

Text2Mesh: Text-Driven Neural Stylization for Meshes optimizes color and geometric details over a variety of source meshes, driven by a target text prompt.

TextureNet: Consistent Local Parametrizations for Learning from High-Resolution Signals on Meshes, which motivated our use of the 4-RoSy parameterization, introduces a neural network architecture designed to extract features from high-resolution signals associated with 3D surface meshes, and shows its application to point cloud segmentation.

@inproceedings{siddiqui2022texturify,
  author    = {Yawar Siddiqui and
               Justus Thies and
               Fangchang Ma and
               Qi Shan and
               Matthias Nie{\ss}ner and
               Angela Dai},
  editor    = {Shai Avidan and
               Gabriel J. Brostow and
               Moustapha Ciss{\'{e}} and
               Giovanni Maria Farinella and
               Tal Hassner},
  title     = {Texturify: Generating Textures on 3D Shape Surfaces},
  booktitle = {Computer Vision - {ECCV} 2022 - 17th European Conference, Tel Aviv,
               Israel, October 23-27, 2022, Proceedings, Part {III}},
  series    = {Lecture Notes in Computer Science},
  volume    = {13663},
  pages     = {72--88},
  publisher = {Springer},
  year      = {2022},
  url       = {https://doi.org/10.1007/978-3-031-20062-5\_5},
  doi       = {10.1007/978-3-031-20062-5\_5},
  timestamp = {Tue, 15 Nov 2022 15:21:36 +0100},
  biburl    = {https://dblp.org/rec/conf/eccv/SiddiquiTMSND22.bib},
  bibsource = {dblp computer science bibliography, https://dblp.org}
}